Routh-Hurwitz Criterion | Stable System | Marginally Stable System | Unstable System | Control System

Routh-Hurwitz Criterion:

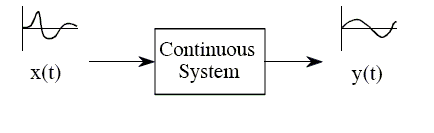

Stable System:

If all the roots of the characteristic equation lie in the left half of the s-plane (that is, every root has a negative real part), then the system is said to be stable.

Marginally Stable System:

If the characteristic equation has no roots in the right half of the s-plane but has one or more non-repeated roots on the imaginary axis, then the system is said to be marginally stable.

Unstable System:

If at least one root of the characteristic equation lies in the right half of the s-plane, or if there are repeated roots on the imaginary axis, then the system is said to be unstable.
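The three definitions can be sketched as a small classifier that takes the roots of the characteristic equation directly. This is only an illustration: the function name `classify_stability` and the tolerance `tol` are assumptions, and repeated roots on the imaginary axis are treated as unstable, following the usual textbook convention.

```python
def classify_stability(roots, tol=1e-9):
    """Classify a linear system from the roots of its characteristic equation."""
    roots = [complex(r) for r in roots]
    # Any root with a positive real part makes the system unstable.
    if any(r.real > tol for r in roots):
        return "unstable"
    # Collect the roots that sit on the imaginary axis (real part ~ 0).
    on_axis = [r for r in roots if abs(r.real) <= tol]
    if not on_axis:
        return "stable"
    # Repeated imaginary-axis roots give a growing response, hence unstable.
    for i, r in enumerate(on_axis):
        for other in on_axis[i + 1:]:
            if abs(r - other) <= tol:
                return "unstable"
    return "marginally stable"


print(classify_stability([-1, -2 + 3j, -2 - 3j]))    # stable
print(classify_stability([-1, 2j, -2j]))             # marginally stable
print(classify_stability([-1, 0.5 + 1j, 0.5 - 1j]))  # unstable
```

In practice the roots are usually not known in advance, which is exactly why the Routh-Hurwitz criterion is useful.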

Statement of Routh-Hurwitz Criterion:

The Routh-Hurwitz criterion states that a system is stable if and only if all the elements in the first column of the Routh array have the same sign. If they do not all have the same sign, the number of sign changes in the first column equals the number of roots of the characteristic equation in the right half of the s-plane, that is, the number of roots with positive real parts.

Necessary but not sufficient conditions for Stability:

For a system to be stable, its characteristic equation must satisfy certain necessary conditions.

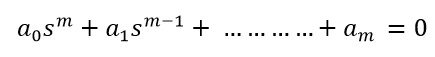

Consider a system with characteristic equation:

1. All the coefficients of the equation should have the same sign.

2. There should be no missing term, that is, no coefficient may be zero.

Even if all the coefficients have the same sign and there are no missing terms, there is no guarantee that the system is stable; these conditions are necessary but not sufficient. To settle the question, we use the Routh-Hurwitz criterion. If the conditions above are not satisfied, the system is not stable: it is either unstable or, at best, marginally stable. The criterion is due to A. Hurwitz and E. J. Routh.
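A minimal sketch of the two necessary conditions as a check on the coefficient list. The function name `passes_necessary_conditions` is hypothetical, and coefficients are assumed to be listed from the highest power of s down to the constant term.

```python
def passes_necessary_conditions(coeffs):
    """Return True if no coefficient is zero and all share the same sign.

    coeffs: coefficients from the highest power of s down to the constant term.
    """
    # Condition 2: a zero coefficient means a term is missing.
    if any(c == 0 for c in coeffs):
        return False
    # Condition 1: all coefficients positive, or all negative.
    return all(c > 0 for c in coeffs) or all(c < 0 for c in coeffs)


print(passes_necessary_conditions([1, 2, 6, 4, 1]))  # True
print(passes_necessary_conditions([1, 0, 2, 8]))     # False (s^2 term missing)
print(passes_necessary_conditions([1, 1, 2, 8]))     # True, yet the system is unstable
```

The last case shows why the conditions are not sufficient: s³ + s² + 2s + 8 factors as (s + 2)(s² − s + 4), which has two roots with real part +0.5.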

Advantages of Routh-Hurwitz Criterion:

1. We can find the stability of the system without solving the equation.

2. We can easily determine the relative stability of the system.

3. By this method, we can determine the range of K for stability.

4. By this method, we can also determine the points where the root locus intersects the imaginary axis.
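As an illustration of advantages 3 and 4, consider a hypothetical characteristic equation s³ + 3s² + 2s + K = 0 (this equation is not from the original article; it is chosen only to keep the arithmetic short). Its Routh array and the resulting conditions are:

```latex
\begin{array}{c|cc}
s^3 & 1 & 2 \\
s^2 & 3 & K \\
s^1 & \dfrac{3\cdot 2 - 1\cdot K}{3} = \dfrac{6-K}{3} & 0 \\
s^0 & K &
\end{array}
\qquad
\text{stable} \iff K > 0 \ \text{and}\ 6 - K > 0 \iff 0 < K < 6
```

At the boundary K = 6 the s¹ row vanishes, and the auxiliary equation formed from the s² row, 3s² + 6 = 0, gives s = ±j√2. These are the points where the root locus crosses the imaginary axis.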

Limitations of Routh-Hurwitz Criterion:

1. This criterion is applicable only for a linear system.

2. It does not give the exact location of the poles; it only indicates how many lie in the right half of the s-plane.

3. It is valid only when the characteristic equation has real coefficients.

The Routh-Hurwitz Criterion:

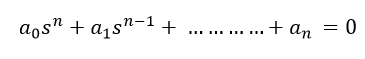

Consider the following characteristic polynomial,

where the coefficients a0, a1, ..., an are all of the same sign and none is zero.

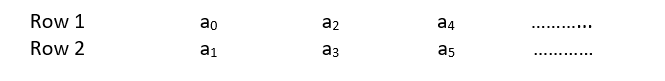

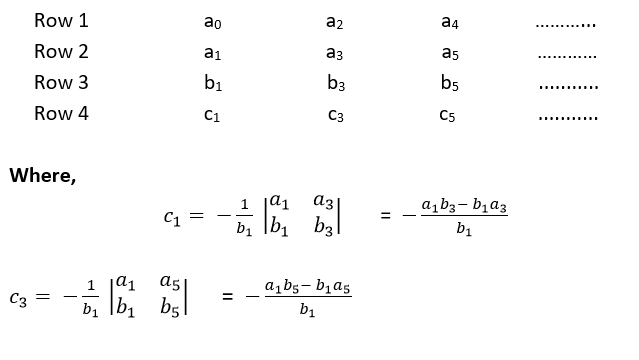

Step 1: Arrange all the coefficients of the above equation in two rows:

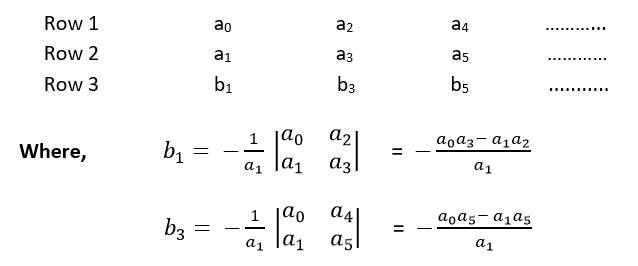

Step 2: From these two rows we will form the third row:

Step 3: Now form the fourth row using the second and third rows:

Step 4: Continue this procedure, forming each new row from the two rows above it, until the s⁰ row is reached:
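Steps 1 to 4 can be written out in the standard textbook form. The sketch below assumes the usual convention that a0 multiplies the highest power, so the polynomial is a0·sⁿ + a1·sⁿ⁻¹ + ... + an; the symbols b and c for the computed rows are introduced here for illustration only.

```latex
\begin{array}{c|cccc}
s^{n}   & a_0 & a_2 & a_4 & \cdots \\
s^{n-1} & a_1 & a_3 & a_5 & \cdots \\
s^{n-2} & b_1 & b_2 & b_3 & \cdots \\
s^{n-3} & c_1 & c_2 & c_3 & \cdots \\
\vdots  & \vdots & & & \\
s^{0}   & a_n & & &
\end{array}
\qquad
\begin{aligned}
b_1 &= \frac{a_1 a_2 - a_0 a_3}{a_1}, &
b_2 &= \frac{a_1 a_4 - a_0 a_5}{a_1}, \\
c_1 &= \frac{b_1 a_3 - a_1 b_2}{b_1}, &
c_2 &= \frac{b_1 a_5 - a_1 b_3}{b_1}.
\end{aligned}
```

Each new element uses the first-column entries of the two rows above it together with the entries one column to the right, divided by the first entry of the row immediately above.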

Example

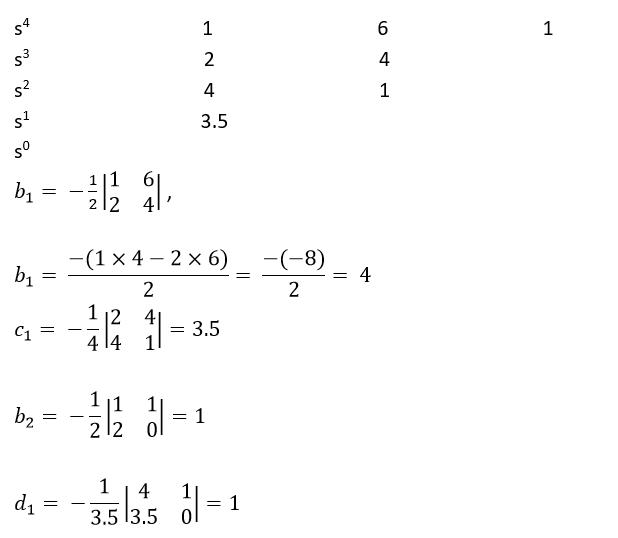

Check the stability of the system whose characteristic equation is given by

s⁴ + 2s³ + 6s² + 4s + 1 = 0

Solution

Obtain the array of coefficients as follows:
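A sketch of the array for this equation, worked out from the coefficients 1, 2, 6, 4, 1 with the usual cross-multiplication rule (each computed entry is shown with its arithmetic):

```latex
\begin{array}{c|ccc}
s^4 & 1 & 6 & 1 \\
s^3 & 2 & 4 & 0 \\
s^2 & \dfrac{2\cdot 6 - 1\cdot 4}{2} = 4 & \dfrac{2\cdot 1 - 1\cdot 0}{2} = 1 & \\
s^1 & \dfrac{4\cdot 4 - 2\cdot 1}{4} = 3.5 & 0 & \\
s^0 & \dfrac{3.5\cdot 1 - 4\cdot 0}{3.5} = 1 & &
\end{array}
```

The first column reads 1, 2, 4, 3.5, 1.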

Since all the elements in the first column of the Routh array have the same sign (all are positive), the given equation has no roots with positive real parts; therefore, the system is stable.
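The same check can be sketched in a few lines of Python. The helper names `routh_array` and `sign_changes` are hypothetical, coefficients are assumed to be listed from the highest power down, and the special cases (a zero in the first column, or an entire row of zeros) are deliberately not handled.

```python
from fractions import Fraction


def routh_array(coeffs):
    """Build the Routh array for coefficients listed from the highest power down.

    Sketch only: a zero in the first column raises an error instead of
    applying the special-case procedures.
    """
    coeffs = [Fraction(c) for c in coeffs]
    width = (len(coeffs) + 1) // 2
    # Step 1: split the coefficients alternately into the first two rows.
    row0 = coeffs[0::2] + [Fraction(0)] * (width - len(coeffs[0::2]))
    row1 = coeffs[1::2] + [Fraction(0)] * (width - len(coeffs[1::2]))
    rows = [row0, row1]
    # Steps 2 to 4: each new row is formed from the two rows above it.
    for _ in range(len(coeffs) - 2):
        upper, lower = rows[-2], rows[-1]
        pivot = lower[0]
        if pivot == 0:
            raise ValueError("zero in first column: special case not handled")
        new_row = [
            (pivot * upper[j + 1] - upper[0] * lower[j + 1]) / pivot
            for j in range(width - 1)
        ]
        new_row.append(Fraction(0))  # pad to keep every row the same width
        rows.append(new_row)
    return rows


def sign_changes(first_column):
    """Count sign changes down the first column (assumes no zero entries)."""
    signs = [value > 0 for value in first_column]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


rows = routh_array([1, 2, 6, 4, 1])  # s^4 + 2s^3 + 6s^2 + 4s + 1
first_column = [row[0] for row in rows]
print([str(value) for value in first_column])  # ['1', '2', '4', '7/2', '1']
print(sign_changes(first_column))              # 0, so no right-half-plane roots
```

Exact fractions are used instead of floats so that the sign test is not affected by rounding.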
